If you’re a technical founder, you’ve likely made calls based on instinct because waiting for perfect data takes too long.

Roadmaps shift. Feedback is slow. Some of your best features started as gut decisions.

This article breaks down how to balance gut instinct with solid proof. You’ll learn when to trust yourself, when to look for data, and how to avoid getting stuck in doubt.

Four experienced founders share real tips from their own journeys with strategy and intuition:

- Vova Feldman, founder and CEO of Freemius

- Cristian Raiber, founder of WPChill

- Patrick Posner, founder of Simply Static

- Derek Ashauer, owner of Conversion Bridge

They’ve built products by mixing vision with data, and share what’s worked for them. Because in a world where even Microsoft ships features no one uses, being strategic and data-driven isn’t always enough.

You need to be direction-driven. So, let’s get into it.

The Reality of Software Business Decisions

Even enterprise giants like Microsoft struggle to predict user needs. Cristian Raiber referenced an executive who admitted that only 10% of Windows features end up being truly useful, despite heavy investment in data and research.

If Microsoft can’t always get it right, what are the odds that a data-only approach will work for your early-stage SaaS?

Cristian puts it bluntly: “I have yet to find someone who’s managed to use just numbers and not their gut feeling as the primary driver of the business. I see gut first and numbers second.”

That doesn’t mean ignoring analytics. But it does mean recognizing their limits.

Data can lag, mislead, or miss key context. A spike in signups might look like a win, until you realize a competitor shut down that same week.

The real insight is this: intuition isn’t guessing. It’s pattern recognition shaped by experience.

Derek Ashauer describes this evolution well:

“I used to think that if I just add this feature, it’ll sell more. Now I know that focusing on a marketing task might grow the business more than writing code.”

As your product matures, so does your judgment. That’s why founders often follow a familiar pattern: idea first, data second. Patrick Posner summarizes this well: “I won’t ship a feature unless I’m 95% sure it will have a decent impact. But I still trust my gut in the end.”

The data might confirm your hunch. Or it might not. But great product decisions rarely begin in a dashboard. They begin with direction.

That’s why it’s so easy to get knocked off course by the allure of the new: it looks like progress, but often pulls you away from your strategy.

Shiny Object Syndrome: The Decision-Maker’s Enemy

It always starts the same way: you’re mid-sprint, deep in the roadmap, when a new idea hits your feed. It’s a feature request, a trending integration, or a hot take that gets under your skin.

It feels useful, so you pause your plan, shift gears, and chase it.

This is what Cristian calls out:

“You think this is the best solution, the next big thing. But if you look through the lens of your core goals, wouldn’t it be easier to say no to a bunch of other things?”

That mental tug-of-war is shiny object syndrome. It’s not about bad ideas — it’s about good ideas showing up at the wrong time, with no clear connection to your strategy.

And it’s more common than you think.

“I noticed I’d plan 10 things and end up doing two, plus 20 completely different things instead,” says Vova. “You need a structure that helps you stick with your plan and not let day-to-day intuition take over.”

Vova’s team eventually addressed this by introducing a North Star OKR (Objective and Key Result) to act as a filter.

Every new idea is evaluated based on whether it supports that singular direction. If it doesn’t, it goes on the backlog.

This approach doesn’t just prevent distraction. It forces clarity. When you define success — whether it’s a revenue goal, customer count, or platform adoption target — you can spot when a “great idea” is just noise.

“It’s like defining your values,” Vova said. “Now, every time an opportunity comes up, we ask: how does this serve our OKRs? If it doesn’t, it’s a maybe later — not a yes.”

The key is being deliberate. New ideas will always show up. But without a framework, you’re at risk of what Derek calls the “classic trap”:

“You forget what you planned a month ago because you saw something on Twitter today and now you’re thinking, maybe I should go for that instead.”

The solution isn’t to ignore every new idea. It’s to install a system that helps you pause, assess, and decide intentionally. A clear metric. A specific goal. An agreement with yourself to protect your long-term focus from short-term dopamine.

With the right system, you can stay open to new ideas and stay focused. Let’s look at how to create that balance:

The Experimentation Balance: Patrick Posner’s 30% Rule

You don’t have to choose between strategy and spontaneity. But you do need to protect your roadmap from becoming a graveyard of half-finished side quests.

Patrick shares a tested system for this: 30% of his team’s development time is reserved for experimentation. The remaining 70% stays locked on core strategic goals.

“We do quite a few experimental things,” Patrick explains. “But a lot of them are behind the scenes, not immediately pushed out.”

This buffer lets him explore without derailing the main vision.

This is critical because new ideas will always compete for attention. Without boundaries, they can slowly eat into the time, focus, and budget needed to actually grow your business.

So how do you explore smartly? Set clear boundaries for experiments.

Patrick doesn’t launch everything that gets built. Some features are tested in private betas for six to ten months before they’re even considered for release. Why? Because support is expensive. Shipping prematurely means committing to maintenance, onboarding, and potential churn.

“I won’t ship a feature unless I’m 95% sure it’ll have a decent impact,” he said. “It hurts to scrap something that cost $5k to build, but it’s better than spending months supporting something that never moves the needle.”

This approach helps him avoid costly dead-ends. For example, his team tested integrations with GitLab and Bitbucket based on user requests, but pulled the plug after realizing the API instability would create more support load than business value.

On the other hand, his GitHub integration — despite being “a complete mess” to maintain — is used by 60% of Simply Static customers. It stayed, not because it was fun to build, but because the payoff was clear.

Use your gut, but test with structure.

Intuition still plays a role, especially when data is limited or inconclusive. But Patrick doesn’t rely on feelings alone. He combines gut instinct with user feedback, beta testing, and real-world usage before committing.

You can apply the same principle:

- Set a time or resource limit for testing new ideas (Patrick uses monthly cycles)

- Run small, controlled betas with real users. Gather feedback early

- Evaluate experiments against a simple question: Will this improve revenue without increasing support burden?

- Track outcomes, even if loosely. Did the experiment generate backlinks, user retention, upgrades, or product insights?
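To make the time limit concrete, here is a minimal sketch of the 30/70 split as a budget check. The function names and the idea of counting hours per cycle are assumptions for illustration, not something Patrick described:

```python
EXPERIMENT_SHARE = 0.30  # the 30% share mentioned above; adjust to your own split


def experiment_hours(total_hours: float, share: float = EXPERIMENT_SHARE) -> float:
    """Hours per cycle that may go to experiments; the rest stays on the roadmap."""
    return total_hours * share


def fits_budget(spent: float, requested: float, total_hours: float) -> bool:
    """True if the requested work keeps experiments within their share."""
    return spent + requested <= experiment_hours(total_hours)


# Hypothetical cycle: 160 developer hours, 40 already spent on experiments.
print(fits_budget(spent=40, requested=6, total_hours=160))   # still inside the cap
print(fits_budget(spent=40, requested=20, total_hours=160))  # would eat into roadmap time
```

The point is less the arithmetic than the habit: once the cap is written down, a new idea has to displace another experiment rather than roadmap work.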

Know when to kill it.

Because not every idea works. And not every sunk cost is worth defending. If a prototype shows weak signal, high friction, or ongoing headaches, it’s okay to cut it loose.

“Sometimes I just scrap a prototype,” Patrick said. “It’s better than wasting more time maintaining something that’s not worth it.”

When you protect a portion of your time for experimentation and set standards for what goes into your main product, you stay open without getting lost.

Before building a decision framework that ties together intuition, data, strategy, and structure, it helps to see where data itself can mislead you.

The Subjective Nature of Data Interpretation

In theory, data gives you a neutral way to make decisions. In reality, data is only as objective as the lens you read it through, and that lens is shaped by your goals, your experience, and, yes, your biases.

Cristian put it simply:

“If I were the one who made up the way we measure success, I would find a way to look at it and consider if we’re having success or not.”

So which traps should you watch for?

Confirmation Bias

Even clean charts and clear metrics can quietly reinforce what you already want to believe. If you’re the one defining what to measure, it’s dangerously easy to also define what success should look like.

This is known as confirmation bias — seeing what you expect to see.

Think about it: if you’re tracking “user engagement” but only measuring time spent in your app, you might miss that users are struggling to complete basic tasks. High engagement could actually mean confusion, not satisfaction.

Cristian notes that “most of us will try to convince ourselves our idea is good because we look at the numbers.” When you want something to be true, you’ll unconsciously cherry-pick the metrics that support your belief.

Derek offers an example from his experience: “I have people telling me ‘this sounds like a great plugin, I love it.’ But when it’s time to pay, nothing. So even validation data can be misleading.”

User signups, survey responses, or website visits might feel like traction. But none of it matters if those signals don’t turn into actual behavior — purchases, renewals, retention.

The Context Gap

Here’s where things get tricky: data rarely comes with built-in context. You see a 20% drop in conversions, but the numbers don’t tell you:

- Your biggest competitor just launched a free tier

- There’s a holiday affecting your target market

- A recent blog post is driving unqualified traffic

- Your payment processor had technical issues

Patrick saw this firsthand.

In December, Patrick’s numbers showed a massive spike in new customers. His initial reaction was: “Oh, we must have done something incredible.” He assumed it was thanks to a shiny new update, until he checked the news and realized a major competitor had shut down.

“Turns out people just needed a replacement. It wasn’t about the feature at all.”

The data was accurate: signups were up. But his interpretation was completely wrong. Without the context of his competitor’s shutdown, he would have credited a feature that had nothing to do with the growth.

Without context, you might invest more resources into that feature when the real driver was something completely unrelated.

So how do you avoid drawing the wrong conclusions from the right numbers?

- Start with a clear question: Are you trying to validate an idea? Understand user behavior? Measure the impact of a feature? Know why you’re looking at data before you dive in.

- Use multiple data points: Don’t rely on one metric. Pair product usage with churn rates. Combine traffic spikes with support ticket trends. Look at behavior, not just opinions.

- Compare expectations to outcomes: If you shipped something expecting it to increase conversions, check whether it actually did. Look beyond signups to revenue, retention, and engagement.

- Stay humble about what you don’t know: As Cristian reiterates, even juggernaut companies like Microsoft, with vast data infrastructures, get it wrong when launching features. Assume you’re missing something, and validate accordingly.

Data is essential. But if you’re not careful, it will simply help you justify decisions you’ve already emotionally committed to. Instead, use it to challenge your assumptions — not just confirm them.

Pro tip: Before you look at your metrics, write down what you expect to see and why. This forces you to acknowledge your assumptions upfront, making it easier to spot when you’re bending the data to fit your expectations.
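One lightweight way to follow that tip is to record the prediction in a structured form before opening the dashboard. The sketch below is only an illustration: the `Prediction` fields, the 5-point tolerance, and the example numbers are all assumptions, not part of any tool mentioned in this article.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    metric: str
    expected_change_pct: float  # written down BEFORE looking at the data
    reason: str                 # why you expect that change


def review(prediction: Prediction, actual_change_pct: float,
           tolerance_pct: float = 5.0) -> str:
    """Compare the recorded expectation with what actually happened."""
    gap = actual_change_pct - prediction.expected_change_pct
    if abs(gap) <= tolerance_pct:
        return "as expected"
    return "surprise: look for missing context before crediting the feature"


# Hypothetical example: you expected +10% trial conversions from new onboarding.
p = Prediction("trial_conversion", 10.0, "new onboarding flow")
print(review(p, 12.0))
print(review(p, 40.0))
```

A result far above the prediction deserves as much scrutiny as one far below it. An outsized jump is exactly the situation Patrick described, where the real cause sat outside the product.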

Up next: how to blend instinct with insight, so you can move fast without losing direction.

Building Your Decision Framework

The most successful software makers have learned to create systems that help them say “yes” to the right things and “no” to everything else.

Find Your North Star

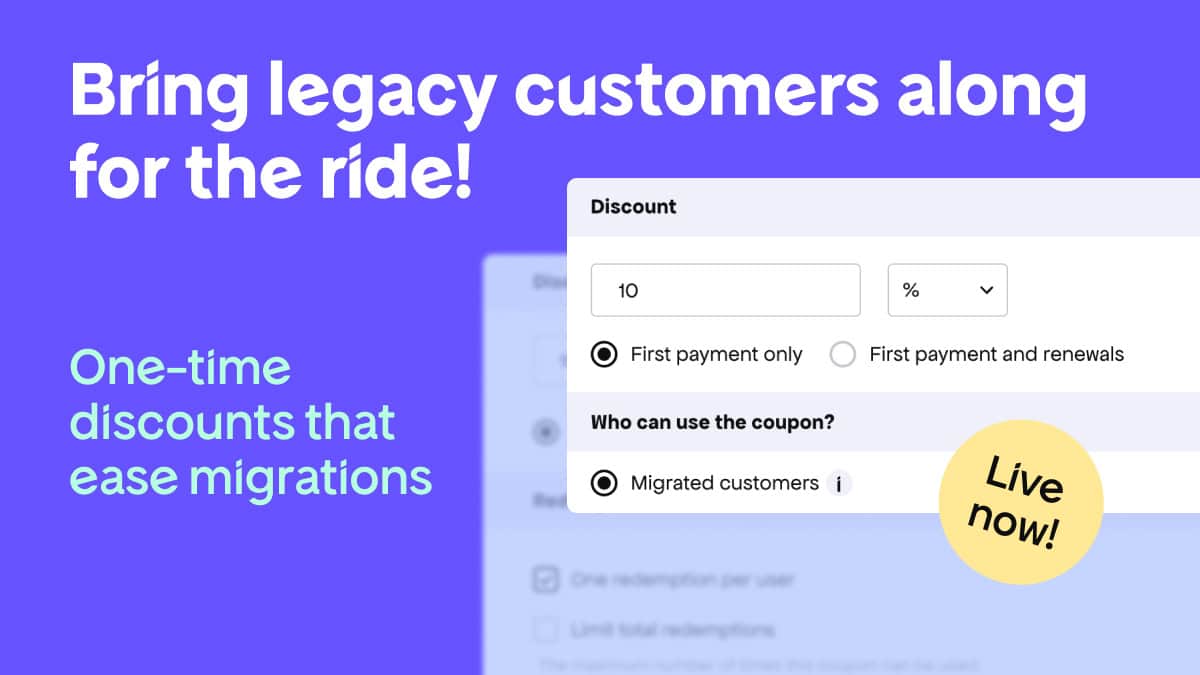

Your decision framework starts with defining what actually matters for your business. As Vova emphasized earlier, setting up clear OKRs (Objectives and Key Results) was transformative for Freemius:

“It really helps to hyperfocus and also navigate past those shiny things because they’re coming at us every day, all the time.”

Set a North Star metric by asking: What specific outcome do I want to achieve in the next 12 months? Make it measurable, not vague. Then work backwards.

But here’s the key: your North Star can’t just be “make more money.” You need something more specific that connects to how you’ll actually achieve that revenue growth.

For Patrick Posner, that North Star is year-over-year MRR growth, with a clear subgoal: expanding his product’s reach to less technical users. Every initiative gets measured against that. If it helps broaden the user base without overwhelming support, it’s worth considering.

“MRR growth is our number one metric,” he said. “But we also want to make Simply Static easier to use — more tutorials, more automation, fewer support requests.”

Use the Filter System

Once you have your North Star, you need practical filters for day-to-day decisions. Vova explains: “Every time there is something that catches your interest, take a step back and ask yourself, ‘Wait, how does it serve us when it comes to the OKRs?’ It’s much easier to put those on a backlog when you have that very specific plan.”

You can build a lightweight version of this filter. For each new idea, ask:

| Question | Pass/fail |
| --- | --- |
| Supports North Star? | ✅ |
| Validated through real behavior? | ✅ |
| Easy to test or low cost to scrap? | ✅ |
| Won’t break onboarding/support flow? | ✅ |

If the answer to most of those is “no” or “not now,” it’s likely a distraction.
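If you like to keep this kind of checklist next to your backlog, the filter can be sketched in a few lines of Python. Treat it as a rough illustration: the `Idea` fields mirror the four questions above, while the `triage` function, the choice to make the North Star check mandatory, and the threshold of three passes are assumptions you should tune to your own process.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    supports_north_star: bool
    validated_by_behavior: bool
    cheap_to_test_or_scrap: bool
    safe_for_support_flow: bool


def triage(idea: Idea, min_passes: int = 3) -> str:
    """Return 'consider' or 'backlog' for a new idea.

    Assumptions: an idea that does not serve the North Star always goes to
    the backlog, and at least `min_passes` of the four checks must pass.
    """
    if not idea.supports_north_star:
        return "backlog"
    checks = [
        idea.supports_north_star,
        idea.validated_by_behavior,
        idea.cheap_to_test_or_scrap,
        idea.safe_for_support_flow,
    ]
    return "consider" if sum(checks) >= min_passes else "backlog"


# Hypothetical ideas for illustration only.
print(triage(Idea("usage-based upsell", True, True, True, False)))
print(triage(Idea("trending integration", False, True, True, True)))
```

Note that "backlog" here means "maybe later", not "never", which matches how Vova describes parking ideas that don’t serve the current OKRs.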

Derek points out the challenge: “When do you listen to your gut or your overall strategy?” The answer isn’t to always choose one over the other, but to have a process for making that choice consciously.

Make Space for Experiments (But Contain the Risk)

New ideas aren’t bad. But without boundaries, they can hijack your strategy. We mentioned Patrick’s 30% rule as a practical solution: allocate a fixed portion of your time or resources to test ideas without letting them overtake your roadmap.

This allows you to explore, learn, and pivot, all while protecting your core progress.

And that’s the point of a decision framework: it helps you trust your gut without losing control. You’ll still follow instincts — but they’ll be filtered through goals, tested with data, and anchored in a direction you’ve defined ahead of time.

Don’t Neglect Your Gut

Even with filters and testing, Patrick admits that “intuition and gut feeling is like the last instance.” After all the analysis, you still need to make a judgment call about whether something feels right.

This isn’t anti-data. It’s recognizing that data has limits.

As Derek notes: “You have to leave some room for intuition to take some of those risks because you could come up with a strategic plan, but then if you’re not willing to try some new things, you could all of a sudden be like, ‘Well, we’re not going to do anything with AI right now because we’re sticking to this plan we created long ago when AI wasn’t even a thing.’”

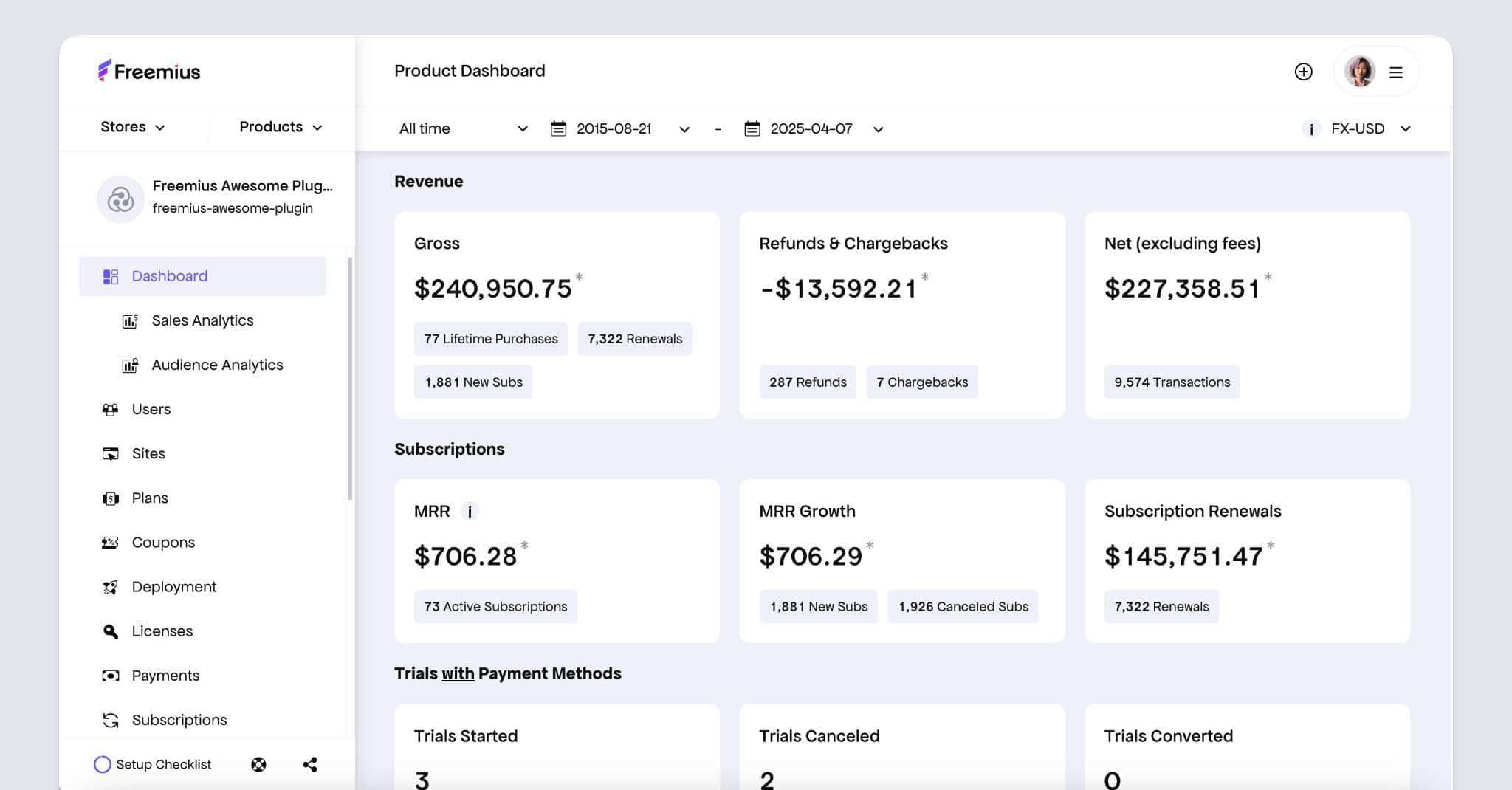

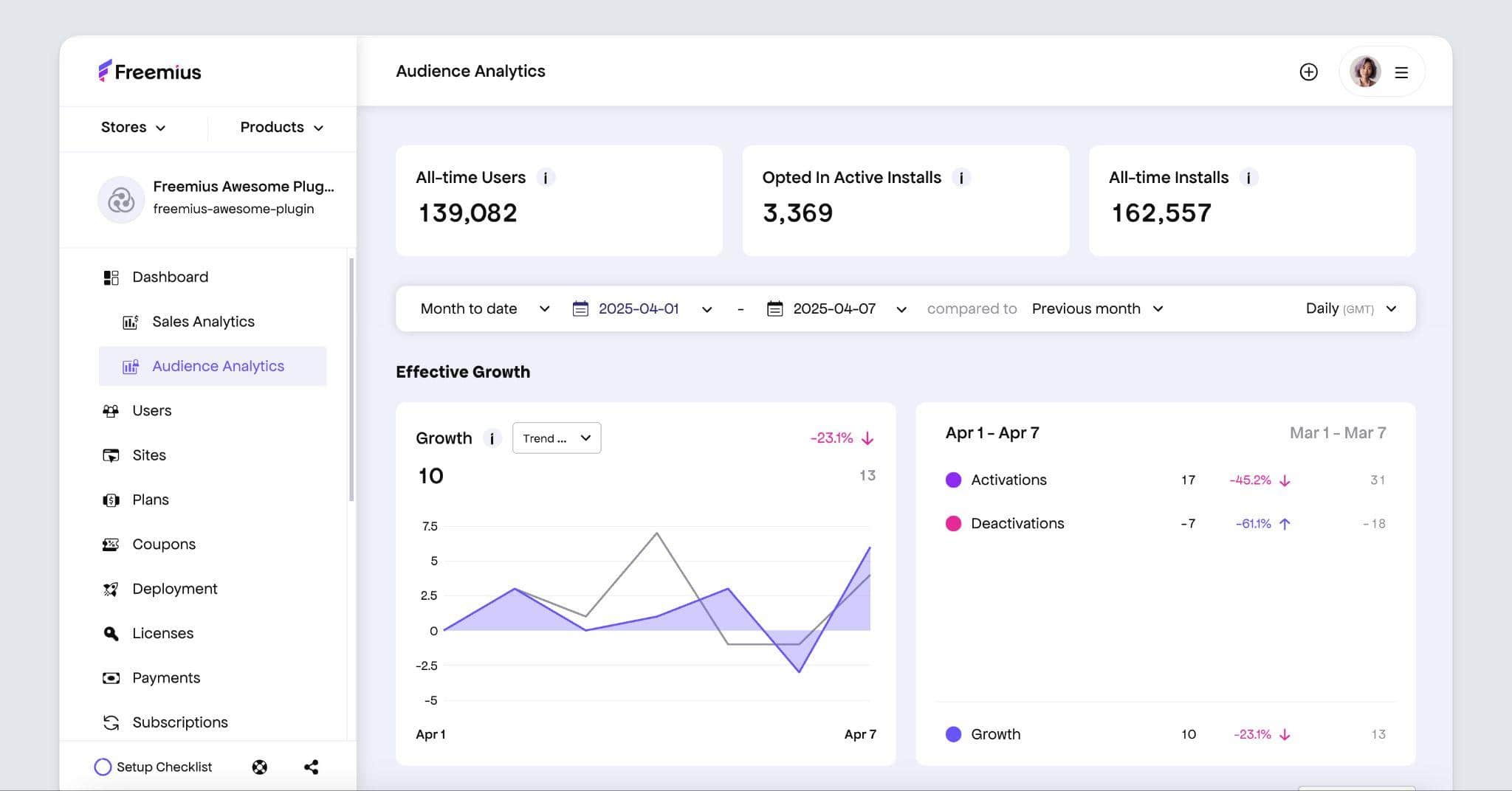

The Freemius Advantage: MoR Solution with Context-Aware Analytics

As a software maker, you don’t just need traffic stats or revenue graphs — you need data that makes sense in the context of how your product is sold, supported, and used.

That’s where Freemius stands apart.

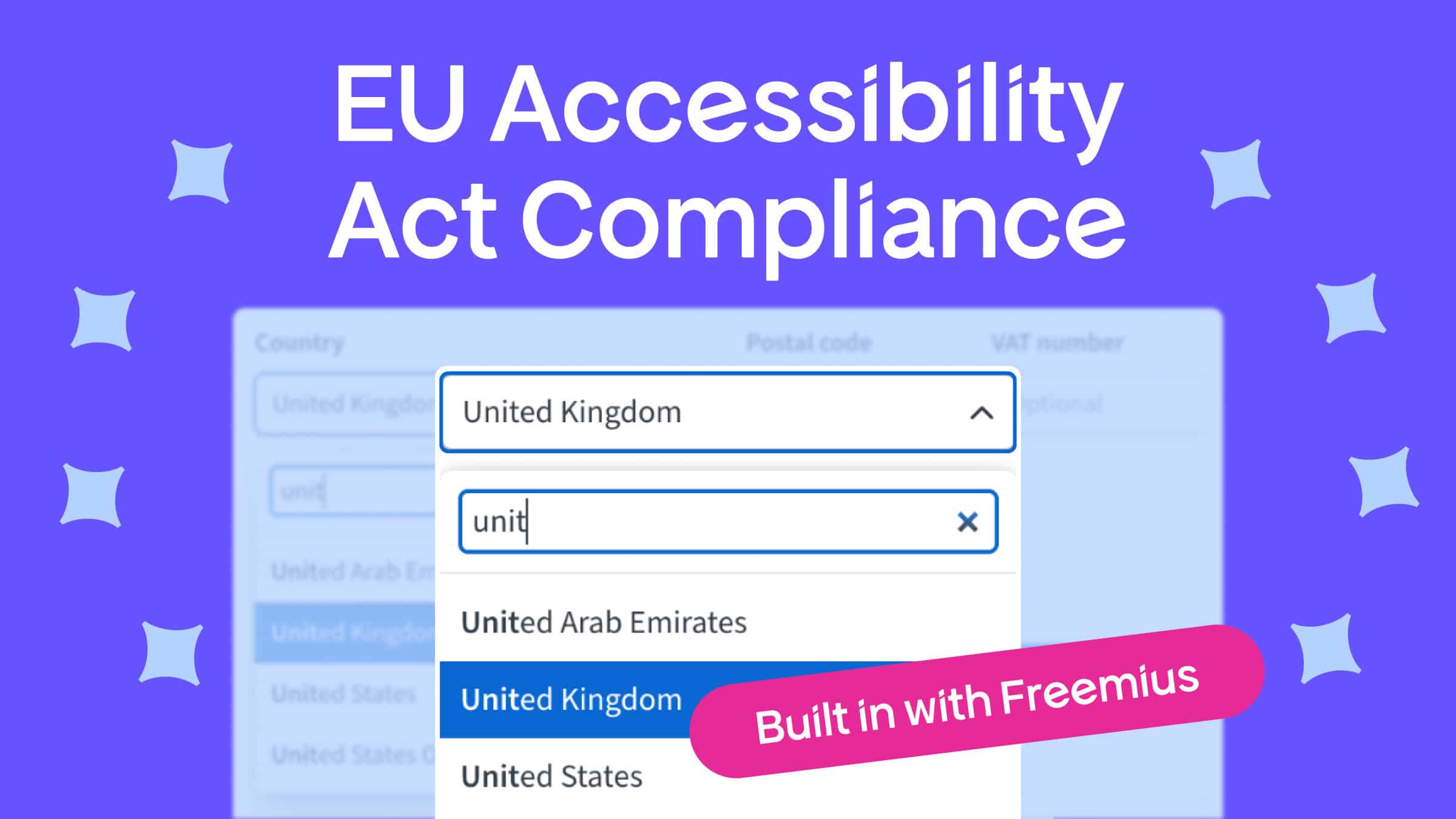

Freemius is a merchant of record (MoR) built specifically for selling software (SaaS, apps, plugins, themes). This focus shows up in many of our features, like subscription management, tax compliance, and cart abandonment recovery. It also shows in how we structure metrics and present insights that align with your real business model.

If you’ve ever looked at a bump in sales or signups and still had no idea why it happened, you’re not alone. Even experienced founders like Patrick Posner and Cristian Raiber have been there.

Freemius, among other things, helps prevent these kinds of false conclusions by embedding product-aware context into its analytics. You’re not just seeing your revenue, you’re seeing:

- How trial conversion rates change after a pricing update

- How feature usage correlates with retention

- How specific cancellation reasons trend over time

- Whether churn is due to pricing, support, UX, or market shifts

You get user-level data, segmented by license type, subscription period, geography, and more, so you can trace business outcomes back to product and marketing decisions with fewer assumptions.

If you’re using OKRs to stay focused, your metrics need to connect directly to those goals. The Freemius dashboard helps with that:

- Set MRR growth as your primary KPI and watch it in real time

- Monitor refund trends following a price increase

- See how A/B tests on pricing, checkout flows, or upsells impact key metrics over time

It’s not about replacing your judgment. It’s about giving you fast, contextual signals to validate or challenge your assumptions.

Start With Intuition, Validate With Data

Data-driven decisions sound great in theory. But as you’ve seen from experienced founders like Vova Feldman, Cristian Raiber, Patrick Posner, and Derek Ashauer, the reality is more nuanced.

Data doesn’t decide — founders do. But without direction, your data just justifies what you already want to believe.

Here’s what to take with you:

- Start with intuition, but don’t stop there. Use your experience and gut to identify opportunities, then validate them before scaling.

- Define your North Star. Whether it’s MRR growth, user activation, or support reduction, set one goal that guides all decisions.

- Build a decision framework. Use simple filters to evaluate new ideas and contain experimentation within safe boundaries.

- Watch for data traps. Know that interpretation is subjective, and bias is always a risk, especially when the numbers seem to support what you already want to believe.

- Use analytics that reflect your reality. Tools like Freemius help you connect product performance to real business outcomes, without the noise.

What are your next steps?

- Audit your current decision-making process. Are you chasing too many ideas? Lacking clear metrics? Start fixing that.

- Pick one core metric to focus on this quarter. Make it visible to your team. Filter every new opportunity through it.

Because the goal isn’t just to be “data-driven.” It’s to build with intention. To move with clarity. And to know, with confidence, when to follow the numbers, and when to follow your gut.

Want to dig deeper?

Check out Freemius’s blog for more insights on product strategy, monetization, and decision-making best practices tailored to software makers.

Let your next decision be a better one!