After launch, many SaaS founders assume growth comes from shipping more features.

New capabilities get added, integrations move up the list, and edge cases and “nice-to-have” improvements start to feel important.

The product does get better, but it also gets broader.

Over time, breadth adds friction: more features to explain, more use cases to support, and more paths for users to navigate.

As a result:

- New users take longer to understand what the product is for

- Pricing is less anchored to an actual outcome

- Upgrading isn’t the obvious next step

And when users don’t move forward, revenue doesn’t either. Not because the product lacks value, but because users struggle to see it and act on it.

We’ve seen this pattern repeat across post-launch SaaS teams and heard the same tension from founders who kept shipping, but couldn’t get revenue to follow.

This article breaks down what they learned on the other side — why building more didn’t fix growth and which levers finally did.

Why “More Features” Fails as a Growth Strategy After Launch

When revenue flatlines, it’s often because users don’t reach the “aha” moment — or the upgrade path — fast enough.

Post-launch revenue problems usually fall into four buckets:

1. Value clarity

New users can’t immediately tell what problem your product solves or why it’s better than alternatives. When value isn’t obvious, users hesitate (or leave before they experience real value).

Example: A landing page lists 12 features, but none explain the outcome clearly. Users sign up, poke around, and leave before reaching the moment where the product actually helps them.

2. Pricing and packaging

Your plans don’t reflect how people actually use the product. Important value is buried, limits feel arbitrary, or the upgrade path isn’t self-explanatory.

Example: Power users and casual users are on the same plan, paying the same price. Smaller customers feel it’s too expensive, larger ones feel they’re getting a bargain.

3. Trials that don’t prove value

Users get access, but not guidance. They click around, explore features, and leave without experiencing a meaningful outcome worth paying for.

Example: A 14-day trial unlocks everything, but nothing nudges users toward completing a meaningful workflow. They explore features, never hit an “aha” moment, and churn when the trial ends.

4. Upgrade timing

You ask for payment too early, too late, or without context. Prompts appear while users are still exploring — not when they’ve hit a limit or recognized the value.

Example: Upgrade prompts trigger on day three of signup, before users have sent a campaign, shipped a project, or seen a real result, so the prompt feels premature and is easy to ignore.

This is where many founders go wrong: they try to fix all four by building more features.

See also: How to Increase LTV (and Revenue per User) Without P*ssing Customers Off

The Cost of Feature Sprawl

Overbuilding fails by making your product harder to understand, more expensive to operate, and more difficult to monetize.

Complexity Dilutes Perceived Value

The more capabilities you pile on, the harder it becomes for a new user to answer “Is this product for me?”

Phil Portman ran into this when TextDrip (an SMS marketing tool for sales and support teams) kept adding features to support different use cases. With everything included in a flat, unlimited plan, core value got lost and customers couldn’t easily tell which features mattered for their situation.

Once the team grouped the features around usage and outcomes (and clearly explained who each tier was for), customers could immediately see where they fit.

The result: Better understanding and 3x more upgrades.

Maintenance Costs Compound Faster Than Revenue

Every feature adds an ongoing tax: support, documentation, bug fixes, and operational overhead. That cost grows whether or not users pay for it.

At Snipcart, founder François Lanthier Nadeau discovered that roughly 80% of users paid nothing while consuming the bulk of support resources.

The fix: A simple $10 minimum monthly fee for low-volume users.

They lost ~2,000 non-paying accounts (nearly half their user base), but revenue tripled in the following months.
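The arithmetic behind a minimum fee is simple: each account pays whichever is larger, its usage-based charge or the floor. Here is a minimal Python sketch. The 2% transaction rate and the `monthly_charge` name are hypothetical placeholders. Only the $10 floor comes from the Snipcart example, and this is not Snipcart's actual billing code.

```python
from decimal import Decimal

# Hypothetical usage rate for illustration; only the $10 floor comes from the Snipcart example.
TRANSACTION_FEE_RATE = Decimal("0.02")
MINIMUM_MONTHLY_FEE = Decimal("10.00")


def monthly_charge(monthly_sales: Decimal) -> Decimal:
    """Return the larger of the usage-based fee and the minimum monthly fee."""
    usage_fee = (monthly_sales * TRANSACTION_FEE_RATE).quantize(Decimal("0.01"))
    return max(usage_fee, MINIMUM_MONTHLY_FEE)


# A low-volume store now pays the floor instead of nothing;
# a high-volume store is unaffected because its usage fee already exceeds the floor.
print(monthly_charge(Decimal("0")))      # 10.00
print(monthly_charge(Decimal("200")))    # 10.00
print(monthly_charge(Decimal("5000")))   # 100.00
```

The floor only changes the bill for accounts whose usage fee falls below it, which is why a change like this mainly affects the long tail of low-volume users.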

Broader Feature Sets Lead to Underpricing

When your product does “a bit of everything,” pricing becomes vague. As features stack, value gets harder to explain, costs compound, and it becomes increasingly difficult to justify why one tier is worth more than another. The result is usually the same: you undercharge by default.

After migrating to Freemius, Divi Kingdom founder Abdelfatah Aboelghit reviewed how customers were using and paying for the product. In a pricing discussion with Freemius CEO Vova Feldman, it became clear that the product delivered significantly more value than its price reflected.

After adjusting pricing and switching to subscriptions, revenue increased by 57% in the first month.

So if features aren’t the answer, what is?

The Revenue Levers Founders Underuse

Pricing, packaging, trials, and upgrade timing often move revenue faster than new features. Yet many founders set them at launch and leave them untouched.

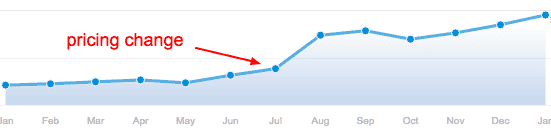

Pricing as a Growth Tool

A single pricing change can outperform months of feature development because it affects revenue immediately, while new features only pay off if users notice and use them.

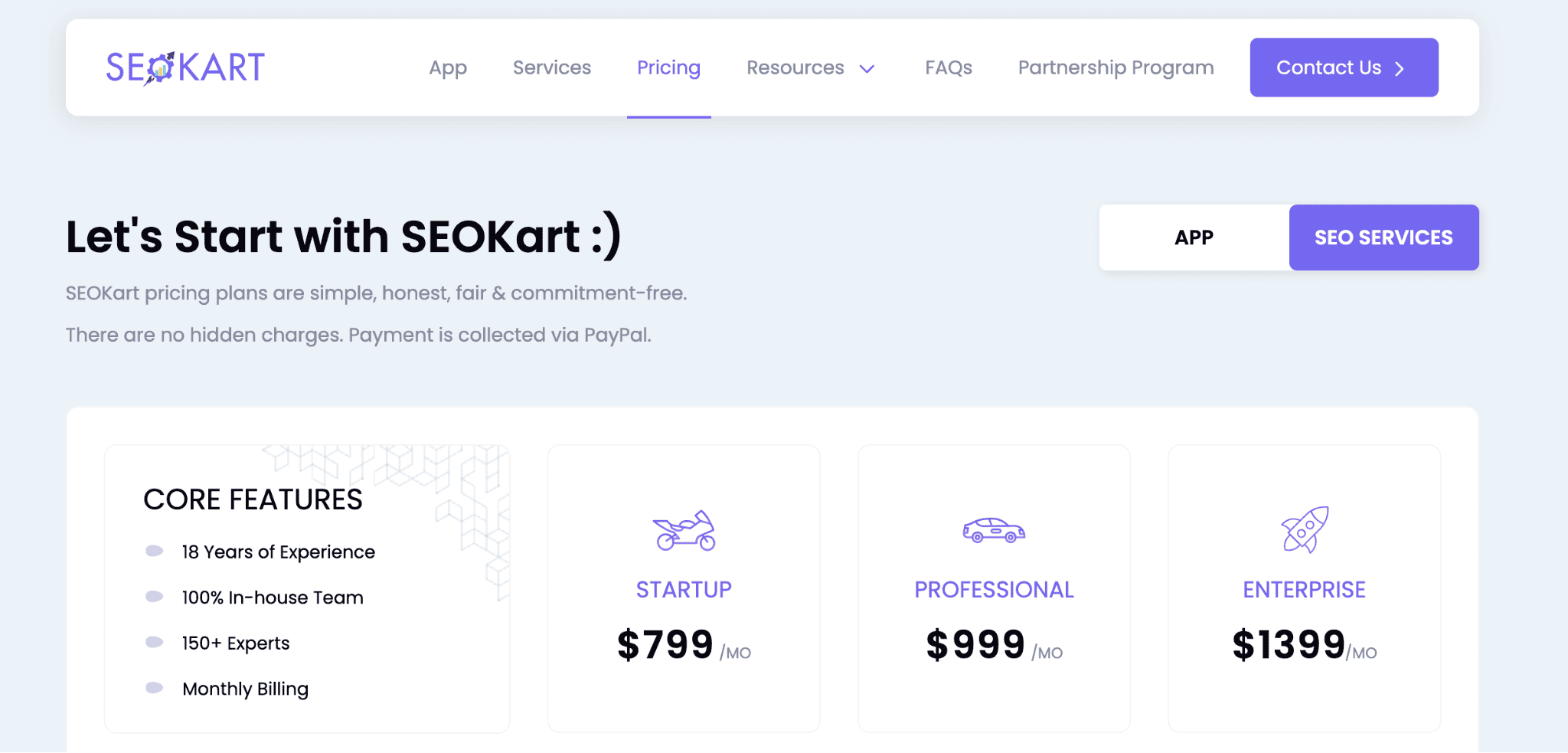

At SEOKart, smaller merchants felt they were paying for more than they needed while larger customers were getting more value without paying proportionally.

Instead of building more features, the team moved from a flat monthly fee to three tiers aligned with customer size and usage (Startup, Professional, Enterprise).

Lower tiers capped keyword research, audits, and backlinks, while higher tiers unlocked more URLs, content optimization, and link volume as merchants scaled.
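Tiered caps like SEOKart's are often easiest to reason about as a small configuration table plus a check. The sketch below uses the three tier names from the example. Every limit value, the resource keys, and the helper functions (`within_limit`, `cheapest_plan_for`) are hypothetical assumptions, not SEOKart's real plan data.

```python
from typing import Optional

# Tier names come from the SEOKart example; every number below is a hypothetical placeholder.
# None means "no cap" on that tier.
PLAN_LIMITS: dict[str, dict[str, Optional[int]]] = {
    "Startup": {"keyword_research": 50, "audits": 5, "backlinks": 100, "urls": 500},
    "Professional": {"keyword_research": 500, "audits": 50, "backlinks": 2_000, "urls": 5_000},
    "Enterprise": {"keyword_research": None, "audits": None, "backlinks": None, "urls": None},
}


def within_limit(plan: str, resource: str, used: int) -> bool:
    """Return True if one more unit of `resource` still fits inside the plan's cap."""
    cap = PLAN_LIMITS[plan][resource]
    return cap is None or used < cap


def cheapest_plan_for(usage: dict[str, int]) -> str:
    """Return the first (cheapest) tier whose caps cover the given monthly usage."""
    for plan, limits in PLAN_LIMITS.items():  # dicts keep insertion order: cheapest first
        if all(limits[r] is None or amount <= limits[r] for r, amount in usage.items()):
            return plan
    raise ValueError("usage exceeds every tier")
```

Because the caps grow with each tier, a merchant's plan tracks its size and usage, which is the alignment the SEOKart example describes.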

Within 90 days:

- ARPU increased by 20%

- Churn among smaller merchants dropped by 15%

Packaging Features Around Outcomes

Plan names and framing often sell better than feature lists.

Nicky Zhu, AI Interaction Product Manager at Dymesty, spent six months shipping 16 new features for a wearable analytics product. The assumption was that more power would naturally drive upgrades.

But usage data told a different story. Only 8% of paying customers were actually using the advanced dashboards.

They then paused feature development and reworked the plan structure. Instead of four feature-heavy tiers, they collapsed pricing into two plans, redesigned onboarding around the core workflow, and positioned upgrades around outcomes.

The impact was immediate:

| Metric | Before | After |
| --- | --- | --- |
| Upgrade rate | 12% | 31% |
| Churn rate | 7.2% | 2.8% |
| MRR | $3,400 | $8,100 |

See also: How to Create Educational Content Around Your Product Features

Trials That Prove Value, Not Just Give Access

A trial that doesn’t guide users to a meaningful outcome quickly doesn’t convert.

ScrapingBee offers 1,000 free API calls without requiring a credit card. For a developer tool like this, asking for payment details upfront would have stopped most developers from making a first request.

“Developers don’t necessarily have access to company credit cards,” explains co-founder Kevin Sahin. “If the developer has to ask for a credit card, they’re not going to try the service.”

Data from Freemius shows that 70.6% of SaaS products offering trials require a credit card, but that approach only works when time-to-value is very short. If users need time or setup to reach value, freemium or usage-based trials convert better.

Upgrade Timing Tied to Usage

Upgrade prompts work best when they appear as users hit a limit, such as trying to publish one more post or run one more report.

Liran Blumenberg, solo founder of FB Group Bulk Poster, put this timing strategy to the test.

| | Before | After |
| --- | --- | --- |
| Upgrade prompt placement | On login, after every second post, and in settings | When a user clicks to publish their 6th post |
| Conversion rate | 9% | 15% |
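The "after" trigger can be expressed as a single check on the publish action, replacing prompts scattered across login and settings. This is a minimal sketch that assumes a free allowance of five posts, so the prompt fires on the click to publish the sixth. `User`, `on_publish_click`, and `FREE_POST_LIMIT` are hypothetical names, not Liran's implementation.

```python
from dataclasses import dataclass

# Hypothetical allowance: free users may publish 5 posts, so the prompt fires
# on the click to publish the 6th, mirroring the trigger described above.
FREE_POST_LIMIT = 5


@dataclass
class User:
    is_paid: bool
    posts_published: int = 0


def on_publish_click(user: User) -> str:
    """Decide what happens when a user clicks Publish.

    Returns "publish" if the post can go out, or "show_upgrade_prompt" when this
    click would exceed the free allowance. Nothing prompts on login or in settings.
    """
    if user.is_paid or user.posts_published < FREE_POST_LIMIT:
        user.posts_published += 1
        return "publish"
    return "show_upgrade_prompt"
```

Keeping the decision in one place makes the trigger easy to move when you test a different limit.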

This is where testing these growth levers comes into play.

Running Revenue Experiments Without Writing New Code

Most of these experiments take just a few hours to set up. Here’s where to start.

Test Pricing Tiers and Plan Structures

Pricing is the fastest lever you control. Small changes directly affect who pays, when they pay, and how long they stay.

Start with what’s easy to change: price points, plan names, which features sit in which tier, and billing cycles.

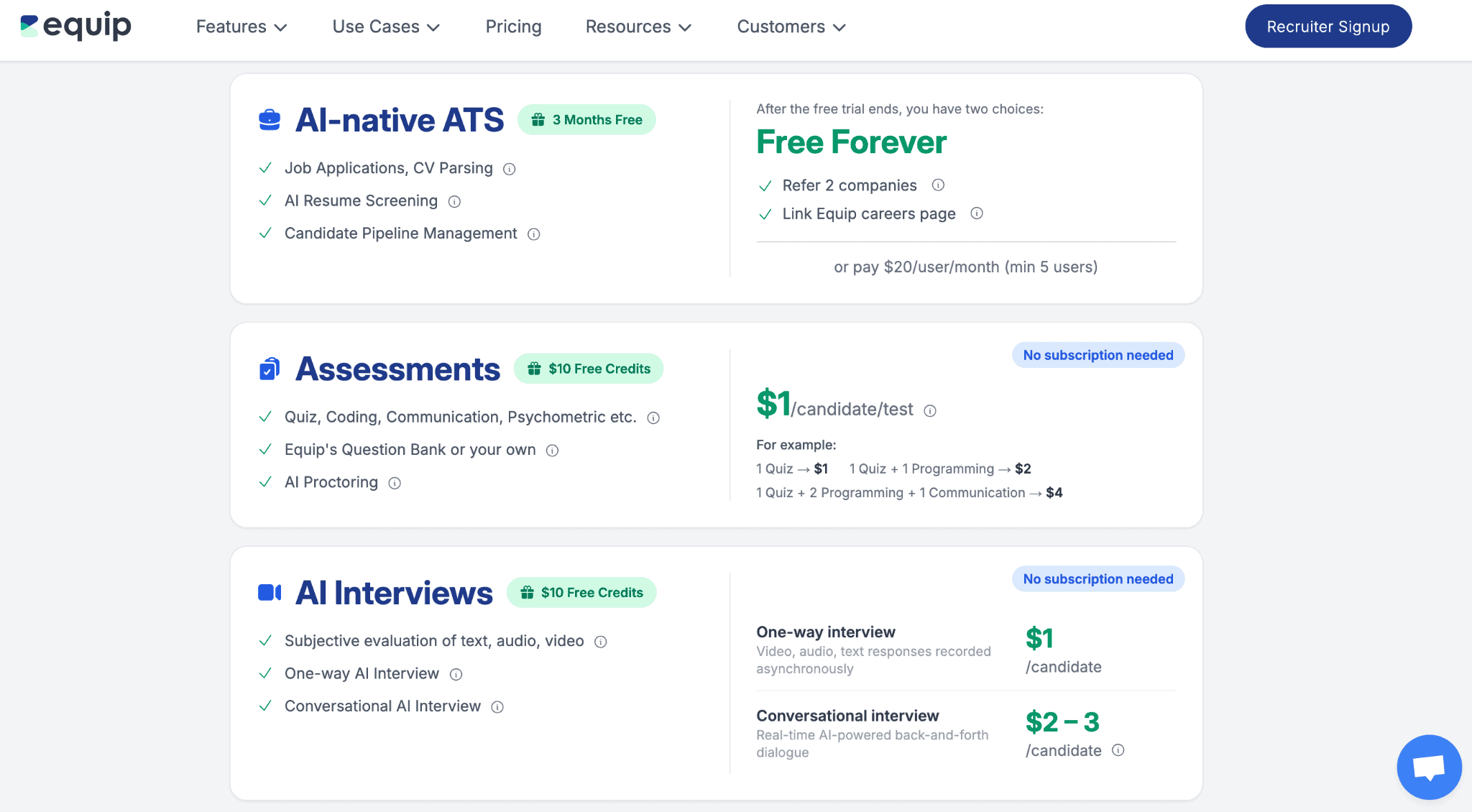

Jayanth Neelakanta at Equip (a candidate shortlisting platform) dropped subscription tiers entirely and moved to usage-based pricing.

“Customers were already treating us like a usage-based product. They’d sign up when they were hiring, churn when they weren’t, then come back later. The subscription model showed 40% churn only because the pricing didn’t match how the product was used,” he explains.

Once pricing aligned with real behavior, conversion climbed from 5% to 38% of signups paying within a year, and ARPU increased 67%.

Use Trial Structure to Reduce Friction

What users accomplish during a trial matters more than how long it lasts.

Liran tested 3-day, 7-day, and 14-day trials. Conversion jumped only when he switched from time-based limits to usage-based limits.

Finding the right number took experimentation. It turned out that 6 posts were just enough to complete one campaign and feel the time savings.

Tip: Identify a complete workflow your product enables. Set the trial limit just past that moment — enough to feel value, not enough to avoid paying.
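One way to apply this tip is to measure how many actions users needed to finish their first complete workflow, then set the limit so most of them get there. The sketch below assumes you already have that per-user count (hypothetical input) and uses a nearest-rank percentile. The 75% default coverage is an arbitrary starting point, not a number from the source.

```python
import math


def suggest_trial_limit(actions_to_first_workflow: list[int], coverage: float = 0.75) -> int:
    """Suggest a usage-based trial limit that lets `coverage` of users finish one full workflow.

    actions_to_first_workflow: for each user who completed a first workflow, how many
    actions (for example, posts) it took. The upgrade prompt would fire on the next action.
    """
    if not actions_to_first_workflow:
        raise ValueError("need at least one observation")
    if not 0 < coverage <= 1:
        raise ValueError("coverage must be in (0, 1]")
    ordered = sorted(actions_to_first_workflow)
    # Nearest-rank percentile; round() trims floating-point noise before taking the ceiling,
    # and max(1, ...) keeps the rank valid for very small coverage values.
    rank = max(1, math.ceil(round(coverage * len(ordered), 9)))
    return ordered[rank - 1]


print(suggest_trial_limit([3, 4, 4, 5, 6, 6, 6, 7, 9, 12]))  # 7
```

Higher coverage lets more users reach value inside the trial but gives away more free usage. That trade-off is what the experiment is meant to settle.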

Identify Upgrade Moments Through Usage Patterns

Upgrade prompts work when they appear at moments of intent, not exploration.

Sanjeev Kumar, founder of OurNetHelps, noticed that his most active users were still on free plans.

“I had bundled too much value into free, and upgrade prompts appeared when users were still exploring, not when they hit a real limit,” he says.

To fix that, he:

- Repackaged around jobs instead of feature lists

- Introduced usage-based limits tied to outcomes

- Moved upgrade prompts to the moment users completed a task

Tip: Identify the action that separates users who convert from those who don’t and place your upgrade prompt immediately after that action.
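To find that separating action, one simple approach is to compare conversion rates between trial users who took each action and those who didn't. This sketch assumes you can export, per trial user, a set of action names and whether they converted. The data shape and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TrialUser:
    converted: bool
    actions: set[str] = field(default_factory=set)


def conversion_rate(users: list[TrialUser]) -> float:
    """Share of users in the group who converted to paid (0.0 for an empty group)."""
    return sum(u.converted for u in users) / len(users) if users else 0.0


def rank_actions_by_lift(users: list[TrialUser]) -> list[tuple[str, float]]:
    """Rank actions by conversion rate among users who took them minus among users who didn't."""
    all_actions = set().union(*(u.actions for u in users))
    ranked = []
    for action in all_actions:
        did = [u for u in users if action in u.actions]
        did_not = [u for u in users if action not in u.actions]
        if not did_not:
            continue  # everyone took this action, so it can't separate converters from others
        ranked.append((action, conversion_rate(did) - conversion_rate(did_not)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```

A large lift shows correlation, not causation. Treat the top-ranked action as a candidate placement for the upgrade prompt, and confirm it with an experiment.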

What to Measure Instead of Feature Adoption

Feature adoption feels comforting to track, but it’s rarely predictive. Instead, focus on signals tied to money:

- Trial-to-paid conversion by cohort

- Revenue per user by acquisition channel

- Time from signup to first meaningful action
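The first and third metrics can be computed from a plain export of signups. This minimal sketch assumes each record carries a signup date, a converted-to-paid flag, and the date of the first meaningful action (whatever that action is for your product). The record shape and the monthly cohort grouping are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Signup:
    signup_date: date
    converted_to_paid: bool
    first_meaningful_action: Optional[date] = None  # None if it never happened


def cohort_key(d: date) -> str:
    """Group signups into monthly cohorts, e.g. "2024-01"."""
    return f"{d.year}-{d.month:02d}"


def trial_to_paid_by_cohort(signups: list[Signup]) -> dict[str, float]:
    """Share of each monthly cohort that converted to paid."""
    totals: dict[str, int] = defaultdict(int)
    paid: dict[str, int] = defaultdict(int)
    for s in signups:
        key = cohort_key(s.signup_date)
        totals[key] += 1
        paid[key] += int(s.converted_to_paid)
    return {key: paid[key] / totals[key] for key in sorted(totals)}


def median_days_to_first_action(signups: list[Signup]) -> Optional[float]:
    """Median days from signup to first meaningful action, ignoring users who never reached it."""
    days = [
        (s.first_meaningful_action - s.signup_date).days
        for s in signups
        if s.first_meaningful_action is not None
    ]
    return median(days) if days else None
```

Comparing cohorts over time shows whether a pricing or trial change actually moved conversion, rather than relying on a single blended rate.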

Liran’s turning point came when he started reading his actual data.

“My trial-to-paid conversion rate was abnormally high for SaaS. When I dug into who was converting, I saw a pattern and realized I didn’t need more features for the people already in my funnel. I needed more of those same people.”

That’s the kind of insight you’re looking for — not a new idea to build, but a clearer picture of who converts and why.

Takeaway: Before adding anything new, pick one metric, pull the data, and look for a pattern. If something is already working, double down on it.

That doesn’t mean you should never build new features. Just be more strategic about what and when.

See also: Intuition vs Data-Driven Product Development

When Building New Features Makes Sense

Features make sense once pricing, trials, and upgrade timing are showing results.

Only then does feature work start to compound. But before anything goes on the roadmap, run it through two filters.

Filter #1: Does Revenue and Usage Data Support It?

A feature earns its place when there’s evidence it will increase ARPU, reduce churn, or unlock a higher-value tier.

Swashata Ghosh, VP of Engineering at Freemius and founder of WPEForm, learned this by making the wrong call first. Faced with repeated requests for a cost calculation feature, he chose to implement Stripe Subscriptions instead.

“Stripe Subscriptions didn’t boost sales. Only after adding the cost calculation feature did I see real results.”

Today, Swashata tracks requests systematically instead of relying on gut feel.

“We track user feedback in GitLab, using a system to keep score. Each time a request comes in, we add a +1. Over time, this reveals the most in-demand features.”
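A +1 scoreboard like this can be as small as a counter. The sketch below only illustrates the scoring idea. It assumes requests arrive as free-text titles, it does not use any GitLab API, and `tally_requests` is a hypothetical name.

```python
from collections import Counter


def tally_requests(requests: list[str]) -> list[tuple[str, int]]:
    """Add one +1 per incoming request and return features ordered by demand.

    Titles are normalized (trimmed, lowercased) so trivial spelling differences
    don't split the count for the same feature.
    """
    scores = Counter(r.strip().lower() for r in requests)
    return scores.most_common()
```

Normalizing titles is a simplifying assumption. Real feedback usually needs a human to merge near-duplicate requests before the counts mean much.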

ScrapingBee applies a similar discipline, with an added focus on operational cost. Before building anything, they ask:

- How long will it take to build?

- How much will it cost to run and maintain?

- How many users need to adopt it before it’s considered successful?

“If a feature is hard to maintain and no one’s using it, we don’t have a hard time scrapping it,” adds ScrapingBee co-founder Pierre de Wulf.
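ScrapingBee's three questions, plus the scrapping rule in the quote, can be written down as a small checklist. In this sketch, the field names, the daily engineering cost, the monthly cost ceiling, and the "keep"/"scrap"/"watch" outcomes are all hypothetical assumptions, not ScrapingBee's actual process.

```python
from dataclasses import dataclass


@dataclass
class FeatureProposal:
    name: str
    build_days: float        # How long will it take to build?
    monthly_run_cost: float  # How much will it cost to run and maintain (per month)?
    adoption_target: int     # How many users must adopt it to count as a success?


def estimated_first_year_cost(p: FeatureProposal, daily_eng_cost: float) -> float:
    """Build cost plus twelve months of running and maintenance cost."""
    return p.build_days * daily_eng_cost + 12 * p.monthly_run_cost


def review_after_launch(p: FeatureProposal, active_adopters: int, max_monthly_cost: float) -> str:
    """Keep features that hit their adoption target; scrap costly ones nobody uses."""
    if active_adopters >= p.adoption_target:
        return "keep"
    if p.monthly_run_cost > max_monthly_cost:
        return "scrap"   # hard to maintain and under-used
    return "watch"       # cheap to keep around; revisit later
```

Writing the adoption target down before building is what makes the later keep-or-scrap call easy, because success was defined in advance.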

See also: How to Prioritize Features in Your Software Product Roadmap

Filter #2: Who’s Actually Asking?

Not all feature requests carry the same weight.

While requests from paying customers tend to reflect real usage and friction, those from free users, churned accounts, or edge cases often come with promises to upgrade — but those promises rarely turn into payments.

Treating every “I’d upgrade if you added X” as genuine intent is a common mistake.
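One way to keep speculative promises from inflating demand is to count requests per requester segment and rank features on paying-customer demand only. The segment labels and the helper name below are hypothetical. The sketch simply separates the two signals described in the paragraph on paying versus non-paying requesters.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FeatureRequest:
    feature: str
    requester_segment: str  # hypothetical labels, e.g. "paying", "free", "churned", "prospect"


def split_demand(requests: list[FeatureRequest]) -> tuple[Counter[str], Counter[str]]:
    """Return (requests from paying customers, requests from everyone else), counted per feature."""
    paying: Counter[str] = Counter()
    other: Counter[str] = Counter()
    for r in requests:
        target = paying if r.requester_segment == "paying" else other
        target[r.feature] += 1
    return paying, other
```

Keeping the non-paying count visible but separate preserves the signal without letting "I'd upgrade if..." promises set the roadmap.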

Arvid Kahl, co-founder of FeedbackPanda, has been there: “In a prior startup, I built several features for a prospective customer who promised to sign up if only those features were implemented. Of course, they never did.”

A good counterexample is Bake That Batter.

Tiago Alves built the product alongside a small group of bakers who depended on it daily after their existing software shut down. When paid plans launched, 125 out of 150 active users immediately subscribed.

The contrast between Arvid and Tiago highlights the real challenge: separating genuine usage-driven demand from speculative intent.

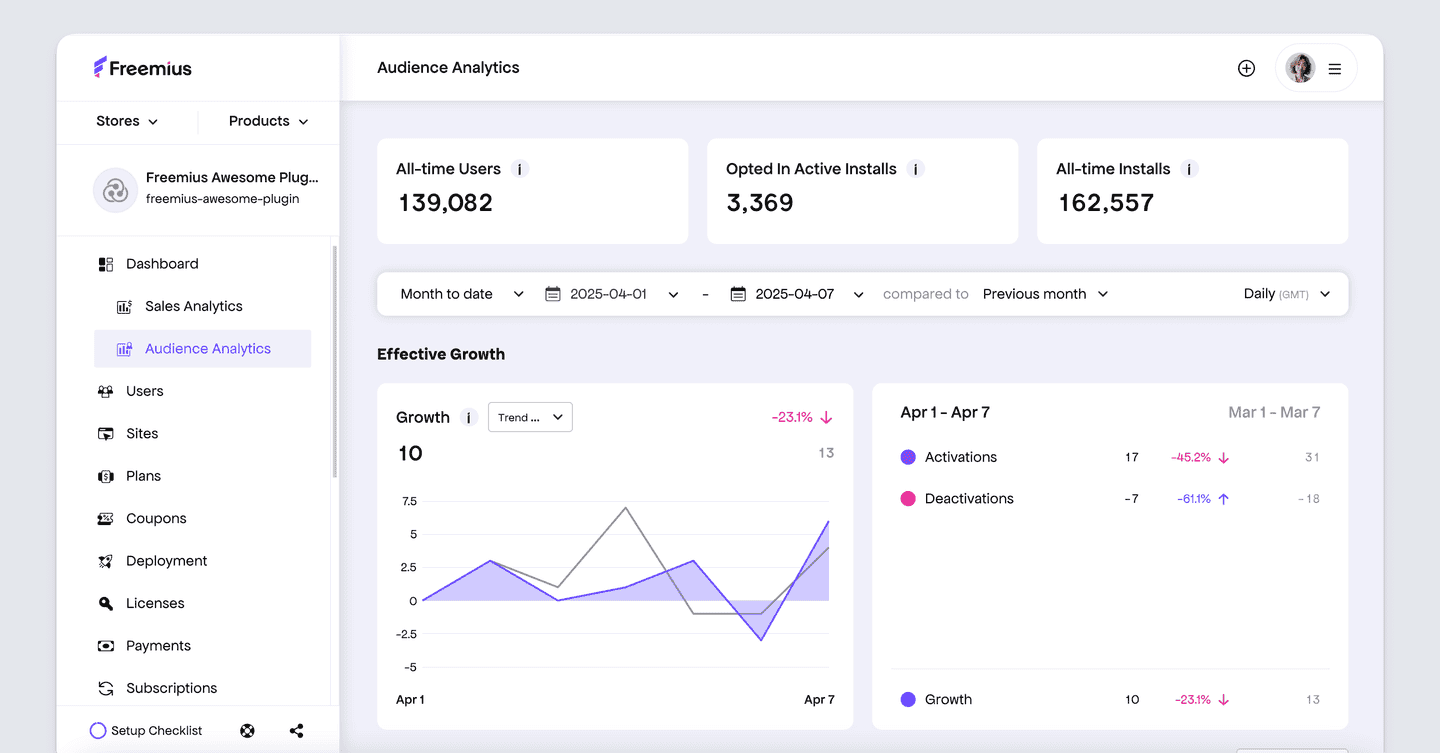

Freemius as the Revenue Signal Layer

Every experiment in this article depends on visibility. Without it, founders default back to shipping features because it’s the only progress they can measure.

Freemius provides that missing signal layer by connecting product behavior to revenue outcomes. It shows where users abandon checkout, which trial structures convert, and how pricing changes affect churn and LTV in real time.

That visibility makes it easier to:

- Track subscription behavior: Revenue by cohort, churn patterns by plan type, and LTV trends by acquisition source, so you understand which users stick and why.

- Test pricing in hours: Adjust plans, trial lengths, and billing cycles from the Developer Dashboard without code changes or deployments.

- See revenue impact before building infrastructure: Get clear data without custom analytics setup, so engineering time stays focused on product.

- Automate the revenue recovery basics: Trial expiration emails, failed payment dunning, and cart abandonment sequences run automatically behind-the-scenes.

Instead of guessing what to build next, you can learn what actually works, then invest in features that support it.

Grow With What You’ve Already Built

Every founder in this article hit the same wall: shipping features that didn’t move revenue. The breakthroughs came when, instead of building, they started experimenting with pricing, trial structures, plan packaging, and upgrade timing.

You don’t need another feature to grow. You need visibility into what’s already working — and the ability to test what isn’t.

If you’re stuck shipping features while revenue stays flat, or unsure which lever to pull next, the answer probably isn’t in your backlog.

Book a discovery call with Freemius founder and CEO Vova Feldman to talk through your product, your pricing, and how to start running revenue experiments this week.